Hand Interaction

The VIVE OpenXR plugin provides the VIVE XR Hand Interaction profile, which lets you interact with objects while using hand tracking. To use it, add it to the Interaction Profiles under Edit > Project Settings > XR Plug-in Management > OpenXR.

Supported Platforms and Devices

| Platform | Headset | Supported | Plugin Version |
| --- | --- | --- | --- |
| PC (PC Streaming) | Focus3 / XR Elite | No | |
| PC (Pure PC) | Vive Cosmos | No | |
| PC (Pure PC) | Vive Pro series | No | |
| AIO | Focus3 / XR Elite | Yes | 2.0.0 and above |

Specification

VIVE XR Hand Interaction is intended to be used together with hand tracking, provided either by VIVE XR Hand Tracking or by Unity's XR Hands package. Enable only one of them. If you plan to use the XR Interaction Toolkit, XR Hands is recommended.

Environment Settings

-

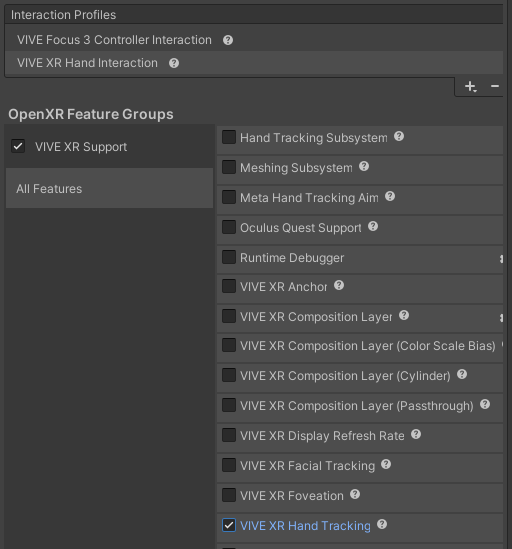

If you do not plan to use the XR Interaction Toolkit, please ensure that VIVE XR Hand Tracking is checked and VIVE XR Hand Interaction is added in the settings under Edit > Project Settings > XR Plug-in Management > OpenXR.

-

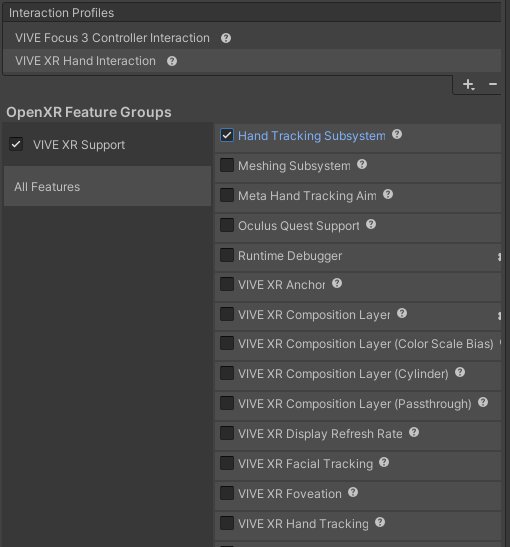

If you plan to use the XR Interaction Toolkit, please ensure that Hand Tracking Subsystem is checked and VIVE XR Hand Interaction is added in the settings under Edit > Project Settings > XR Plug-in Management > OpenXR.

The following chart lists verified version combinations for use with the XR Interaction Toolkit.

| Unity | Input System | OpenXR | XR Plug-in Management | XR Hands | XR Interaction Toolkit |
| --- | --- | --- | --- | --- | --- |
| 2021.3.16 | 1.4.4 | 1.6.0 | 4.4.0 | 1.3.0 | 2.3.1 |
| 2022.3.21 | 1.4.4 | 1.6.0 | 4.4.0 | 1.3.0 | 2.3.1 |
| 2021.3.36 | 1.7.0 | 1.9.1 | 4.4.0 | 1.5.0-pre.3 | 2.5.4 |
| 2022.3.21 | 1.7.0 | 1.9.1 | 4.4.0 | 1.5.0-pre.3 | 2.5.4 |
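You can also check a project against the verified compatibility chart with a script, for example in a CI step. The sketch below is a minimal, illustrative version. It assumes the standard Unity package IDs for the five packages, and it assumes the versions are read from the `dependencies` map of `Packages/manifest.json`. It also assumes an editor version string such as `2022.3.21f1`. The helper names are hypothetical and are not part of the VIVE plugin.

```python
import json
import re

# Unity package IDs for the chart columns (assumed standard package names).
PACKAGES = (
    "com.unity.inputsystem",             # Input System
    "com.unity.xr.openxr",               # OpenXR
    "com.unity.xr.management",           # XR Plug-in Management
    "com.unity.xr.hands",                # XR Hands
    "com.unity.xr.interaction.toolkit",  # XR Interaction Toolkit
)

# Rows copied from the verified compatibility chart, package versions in PACKAGES order.
VERIFIED = [
    ("2021.3.16", ("1.4.4", "1.6.0", "4.4.0", "1.3.0", "2.3.1")),
    ("2022.3.21", ("1.4.4", "1.6.0", "4.4.0", "1.3.0", "2.3.1")),
    ("2021.3.36", ("1.7.0", "1.9.1", "4.4.0", "1.5.0-pre.3", "2.5.4")),
    ("2022.3.21", ("1.7.0", "1.9.1", "4.4.0", "1.5.0-pre.3", "2.5.4")),
]


def packages_from_manifest(manifest_text):
    """Return the "dependencies" map of a Unity Packages/manifest.json file."""
    return json.loads(manifest_text).get("dependencies", {})


def find_verified_row(editor_version, dependencies):
    """Return the index of the matching chart row, or None if the project is not a verified combination."""
    # Editor versions look like "2022.3.21f1"; keep only the major.minor.patch part.
    match = re.match(r"\d+\.\d+\.\d+", editor_version)
    if match is None:
        return None
    unity = match.group(0)
    installed = tuple(dependencies.get(name) for name in PACKAGES)
    for index, (row_unity, row_packages) in enumerate(VERIFIED):
        if row_unity == unity and row_packages == installed:
            return index
    return None


if __name__ == "__main__":
    manifest = json.dumps({"dependencies": {
        "com.unity.inputsystem": "1.7.0",
        "com.unity.xr.openxr": "1.9.1",
        "com.unity.xr.management": "4.4.0",
        "com.unity.xr.hands": "1.5.0-pre.3",
        "com.unity.xr.interaction.toolkit": "2.5.4",
    }})
    print(find_verified_row("2022.3.21f1", packages_from_manifest(manifest)))  # prints 3
```

The comparison is an exact string match per package. That deliberately flags any version outside the chart, including newer patch releases, because only the listed combinations are verified.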

Golden Sample

Interact with Objects

Hand Interaction provides two poses (the pointer pose and the grip pose) and two interaction values (the select value and the grip value). Together, these can drive actions such as long-distance raycasting and close-range grabbing or touching.
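The sketch below shows one way to turn the grip pose and grip value into a close-range decision. It is written in Python for illustration only. Its assumptions are labeled in the code: the grip pose position is treated as the hand's grab point, each object is reduced to a center point, and both the grab radius and the grip threshold are arbitrary values that the plugin does not define. The function name is also hypothetical.

```python
import math

# Hypothetical tuning values; not defined by VIVE XR Hand Interaction.
GRAB_RADIUS_M = 0.08   # max distance from grip pose to object center, in meters
GRIP_THRESHOLD = 0.5   # grip value at or above which the hand counts as grabbing


def close_range_action(grip_position, object_center, grip_value,
                       radius=GRAB_RADIUS_M, threshold=GRIP_THRESHOLD):
    """Classify a close-range interaction as "grab", "touch", or None (out of reach)."""
    if math.dist(grip_position, object_center) > radius:
        return None  # too far away: leave it to long-distance raycasting
    return "grab" if grip_value >= threshold else "touch"
```

For example, a grip position 5 cm from an object with a grip value of 0.9 yields `"grab"`. The same position with a grip value of 0.2 yields `"touch"`.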

The ray direction is the forward direction of pointerPose.rotation.
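In Unity, the forward direction of a rotation is `rotation * Vector3.forward`, where `Vector3.forward` is (0, 0, 1). The Python sketch below reproduces that math for a quaternion given as (x, y, z, w). It assumes Unity's left-handed convention and a normalized quaternion. It also assumes the ray starts at the pointer pose position, which the text above does not state explicitly. The function names are hypothetical.

```python
import math


def pointer_forward(rotation):
    """Return rotation * (0, 0, 1) for a Unity-style quaternion (x, y, z, w)."""
    x, y, z, w = rotation
    # Closed form of rotating Unity's Vector3.forward (0, 0, 1) by the quaternion.
    return (
        2.0 * (x * z + w * y),
        2.0 * (y * z - w * x),
        1.0 - 2.0 * (x * x + y * y),
    )


def ray_point(origin, rotation, distance):
    """Return the point `distance` meters along the pointer ray (origin assumed to be the pointer position)."""
    fx, fy, fz = pointer_forward(rotation)
    ox, oy, oz = origin
    return (ox + fx * distance, oy + fy * distance, oz + fz * distance)


if __name__ == "__main__":
    half = math.sqrt(0.5)
    # A 90-degree turn about +Y points the ray along +X (approximately (1, 0, 0)).
    print(pointer_forward((0.0, half, 0.0, half)))
```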

With this data, you can use raycasting to select or interact with objects in XR.
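The sketch below shows how such a raycast selection could work. It finds the nearest object hit by the pointer ray, then treats the object as selected when the select value reaches a threshold. The following are assumptions for illustration:

- Objects are approximated as spheres.
- The threshold is arbitrary.
- The function names are hypothetical.

In a Unity project you would normally use the physics raycast or the XR Interaction Toolkit's ray interactor instead.

```python
import math

# Hypothetical threshold; the source does not specify one.
SELECT_THRESHOLD = 0.5


def ray_sphere_distance(origin, direction, center, radius):
    """Return the distance along a unit-length ray to a sphere, or None on a miss."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(a * d for a, d in zip(oc, direction))
    c_term = sum(a * a for a in oc) - radius * radius
    disc = b * b - c_term
    if disc < 0:
        return None
    root = math.sqrt(disc)
    t = -b - root
    if t < 0:
        t = -b + root  # ray origin is inside the sphere: use the exit point
    return t if t >= 0 else None


def pick_target(origin, direction, objects, select_value, threshold=SELECT_THRESHOLD):
    """Return (name, is_selected) for the nearest object hit by the ray, or None."""
    length = math.hypot(*direction)
    direction = tuple(d / length for d in direction)  # normalize the ray direction
    best = None  # (name, distance) of the closest hit so far
    for name, (center, radius) in objects.items():
        t = ray_sphere_distance(origin, direction, center, radius)
        if t is not None and (best is None or t < best[1]):
            best = (name, t)
    if best is None:
        return None
    return best[0], select_value >= threshold
```

In this sketch, `direction` would come from the forward direction of pointerPose.rotation, and `select_value` from the select value.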

Apply in XR Interaction Toolkit

The XR Interaction Toolkit (XRI) offers many ready-made interaction features, most of which are driven by input actions. By adding VIVE XR Hand Interaction bindings to XRI's input actions, you can use it in the XRI samples.

-

Please ensure that Hand Tracking Subsystem is checked and VIVE XR Hand Interaction is added in the settings under Edit > Project Settings > XR Plug-in Management > OpenXR.

-

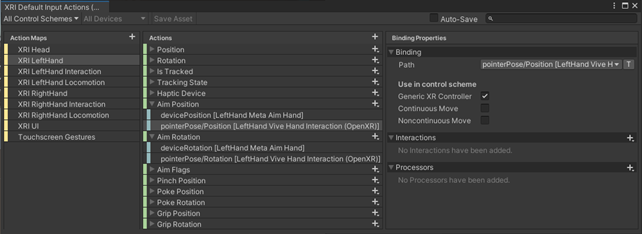

Open XRI Default Input Actions from Assets > Samples > XR Interaction Toolkit > [version] > Starter Assets, where [version] is your installed XR Interaction Toolkit version.

-

In both the XRI LeftHand and XRI RightHand action maps, add a pointer/position binding to Aim Position and a pointer/rotation binding to Aim Rotation.

-

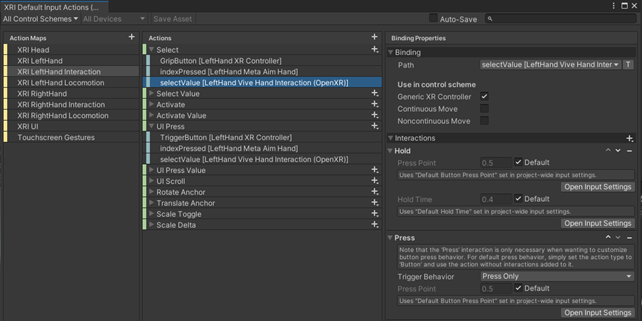

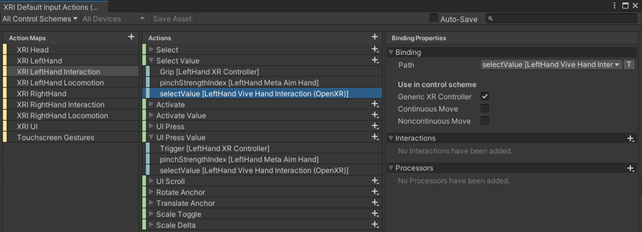

Modify the following input actions in both the XRI LeftHand Interaction and XRI RightHand Interaction action maps:

- For Select / UI Press, set Action Type to Value and Control Type to Axis.

- Add a selectValue binding to Select / UI Press, and add Hold and Press under Interactions.

- Add a selectValue binding to Select Value / UI Press Value.

-

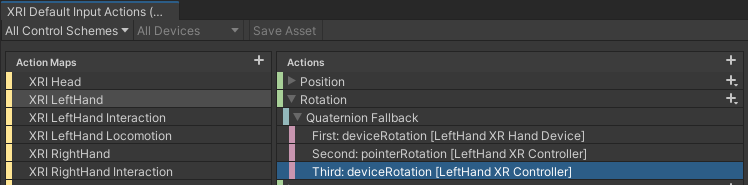

Reorder the input bindings so that hand tracking data is not overridden by data from other controllers.

-

Build and run the HandsDemoScene, which is part of the XR Interaction Toolkit's samples. You should be able to raycast, grab, and touch objects.
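In the steps above, the select value is bound to Select / UI Press with Hold and Press interactions. The Python sketch below is a simplified model of how a continuous value like this can become press, hold, and release events. It is not the Input System's actual implementation. The press point, release point, and hold time are assumed values chosen to resemble common Input System defaults; check the Input System settings in your own project. The function name is hypothetical.

```python
# Assumed thresholds, chosen to resemble common Input System defaults.
PRESS_POINT = 0.5                  # value at which a press starts
RELEASE_POINT = PRESS_POINT * 0.75  # lower value at which the press ends (hysteresis)
HOLD_TIME_S = 0.4                  # seconds a press must last to count as a hold


def select_events(samples, press_point=PRESS_POINT,
                  release_point=RELEASE_POINT, hold_time=HOLD_TIME_S):
    """Turn (time_s, select_value) samples into (time_s, event) tuples.

    Events are "press", "hold", and "release". Simplified model only.
    """
    events = []
    pressed_at = None  # start time of the current press, or None when released
    held = False       # whether "hold" was already reported for this press
    for time_s, value in samples:
        if pressed_at is None:
            if value >= press_point:
                pressed_at = time_s
                held = False
                events.append((time_s, "press"))
        elif value <= release_point:
            pressed_at = None
            events.append((time_s, "release"))
        elif not held and time_s - pressed_at >= hold_time:
            held = True
            events.append((time_s, "hold"))
    return events
```

The separate, lower release point keeps a select value that hovers around the press point from producing a rapid series of presses and releases.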

See Also

-

VIVE XR Hand Interaction extension.