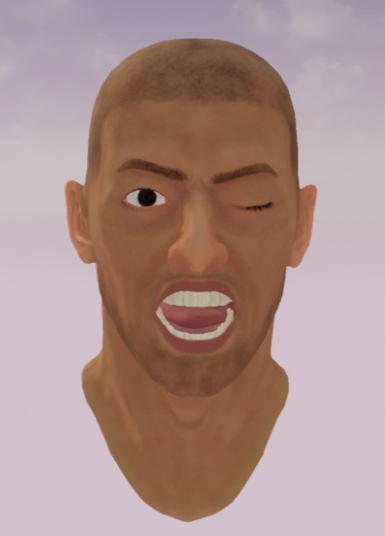

Integrate Facial Tracking Data With Your Avatar

OpenXR Facial Tracking Plugin Setup

Supported Unreal Engine version: 4.26+


Enable Plugins:

- Please enable plugin in Edit > Plugins > Virtual Reality:


Disable Plugins:

- The "Steam VR" plugin must be disabled for OpenXR to work.

- Please disable plugin in Edit > Plugins > Virtual Reality:


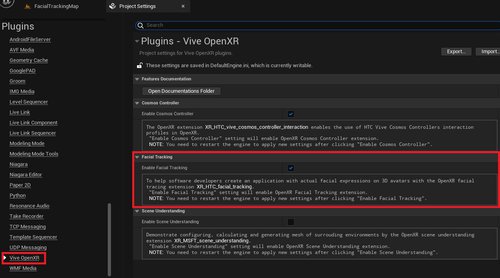

Project Settings:

- Make sure the “OpenXR Facial Tracking extension” is enabled. The setting is in Edit > Project Settings > Plugins > Vive OpenXR > Facial Tracking.

Initialize / Release Facial Tracker

To use OpenXR Facial Tracking, the facial tracker must be created before any tracking data is read, and released when it is no longer needed.


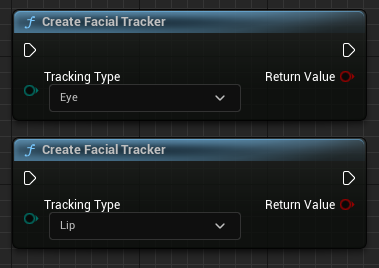

Initialize Facial Tracker


Create Facial Tracker

- Pass in (or select) Eye or Lip as the input of this function to indicate which tracking type to create.

- This function is usually called at the start of the game.


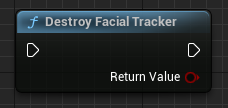

Release Facial Tracker

- Destroy Facial Tracker: This function does not take a tracking type as input; it determines by itself which tracking types need to be released. It is usually called at the end of the game.
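The create/release pairing described above can be wrapped in a small helper that remembers which tracking types were created, so that one call at shutdown releases exactly those. The following is a minimal standalone sketch in plain C++, not the plugin's or the sample's actual implementation: `FacialTrackerLifecycle`, `TrackerType`, and the two callbacks are hypothetical names standing in for the Create Facial Tracker and Destroy Facial Tracker functions.

```cpp
#include <functional>
#include <set>
#include <utility>

// Hypothetical tracking types, mirroring the Eye / Lip input of Create Facial Tracker.
enum class TrackerType { Eye, Lip };

// Hypothetical helper: records which trackers were created so that a single
// release call can destroy exactly those.
class FacialTrackerLifecycle {
public:
    using CreateFn = std::function<bool(TrackerType)>;
    using DestroyFn = std::function<void(TrackerType)>;

    FacialTrackerLifecycle(CreateFn create, DestroyFn destroy)
        : create_(std::move(create)), destroy_(std::move(destroy)) {}

    // Call at the start of the game. Starting an already started type is a no-op.
    bool Start(TrackerType type) {
        if (active_.count(type) != 0) return true;
        if (!create_(type)) return false;
        active_.insert(type);
        return true;
    }

    // Call at the end of the game. Releases every tracker that was started.
    void StopAll() {
        for (TrackerType type : active_) destroy_(type);
        active_.clear();
    }

    bool IsActive(TrackerType type) const { return active_.count(type) != 0; }

private:
    CreateFn create_;
    DestroyFn destroy_;
    std::set<TrackerType> active_;
};
```

In an Unreal project, the two callbacks would forward to the plugin's create and destroy functions. Guarding against double creation keeps the helper safe when several actors request the same tracking type.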


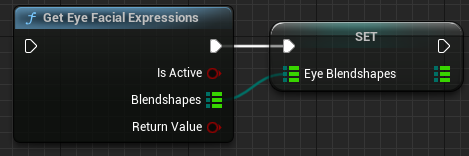

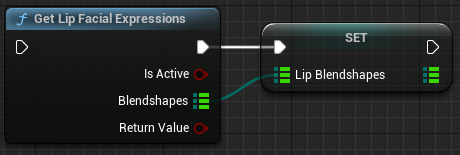

Get Eye / Lip Facial Expressions Data

Getting the Detection Result

Detected eye or lip expression results are available from the blueprint functions “GetEyeFacialExpressions” and “GetLipFacialExpressions”.

Feed OpenXR FacialTracking Eye & Lip Data to Avatar

Use the data from “GetEyeFacialExpressions” or “GetLipFacialExpressions” to update the avatar’s eye shapes or lip shapes.

Note: The Avatar used in this tutorial is the model in the ViveOpenXR sample.

Note: This tutorial will be presented using C++.

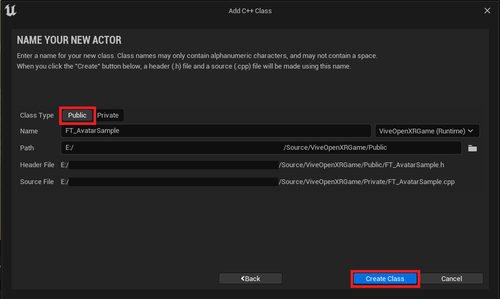

Step1. Essential Setup

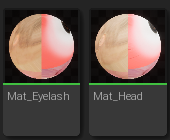

- Import head and eyes skeleton models/textures/materials.

Step2. FT_Framework: Handle initializing / releasing the Facial Tracker


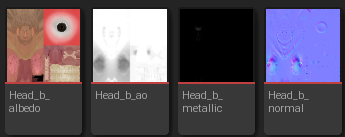

- Create new C++ Class:

- Content Browser > All > C++ Classes > right-click > choose New C++ Class…


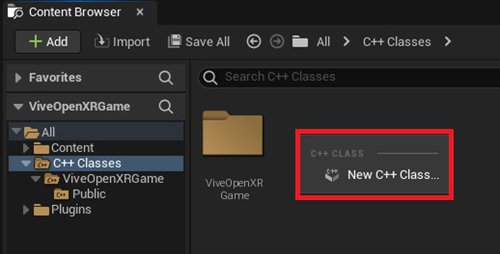

After choosing New C++ Class, the “Add C++ Class” window pops up. In the CHOOSE PARENT CLASS step:

- Choose “ None ” and press “ Next ”.


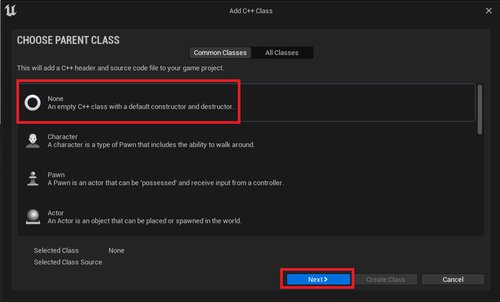

In the NAME YOUR NEW CLASS step:

- Class Type: Private

- Name: FT_Framework

- After the previous settings are completed, press Create Class .

- FT_AvatarSample.h : Add the following #include statements at the top.

// Get facial expression enums from ViveOpenXR plugin.

#include "ViveFacialExpressionEnums.h"

// These provide the functionality required for ViveOpenXR plugin - Facial Tracking.

#include "ViveOpenXRFacialTrackingFunctionLibrary.h"

- FT_AvatarSample.h : Add the following properties under public .

public:

// Called every frame

virtual void Tick(float DeltaTime) override;

/** Avatar head mesh. */

UPROPERTY(VisibleDefaultsOnly, Category = Mesh)

USkeletalMeshComponent* HeadModel;

/** Avatar left eye mesh. */

UPROPERTY(VisibleDefaultsOnly, Category = Mesh)

USkeletalMeshComponent* EyeModel_L;

/** Avatar right eye mesh. */

UPROPERTY(VisibleDefaultsOnly, Category = Mesh)

USkeletalMeshComponent* EyeModel_R;

/** Left eye's anchor. */

UPROPERTY(VisibleDefaultsOnly, Category = Anchor)

USceneComponent* EyeAnchor_L;

/** Right eye's anchor. */

UPROPERTY(VisibleDefaultsOnly, Category = Anchor)

USceneComponent* EyeAnchor_R;

/** If true, this Actor will update Eye expressions every frame. */

UPROPERTY(EditAnywhere, Category = FacialTrackingSettings)

bool EnableEye = true;

/** If true, this Actor will update Lip expressions every frame. */

UPROPERTY(EditAnywhere, Category = FacialTrackingSettings)

bool EnableLip = true;

bool isEyeActive = false;

bool isLipActive = false;

- FT_AvatarSample.h : Add the following properties under private .

private:

/** ¬We will use this variable to set both eyes anchor. */

TArray<USceneComponent*> EyeAnchors;

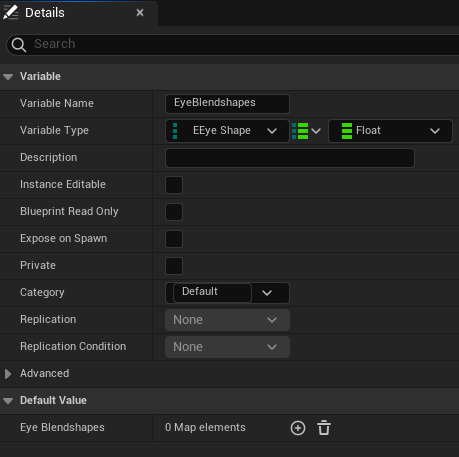

/** ¬We will use this variable to set eye expressions weighting. */

TMap<EEyeShape, float> EyeWeighting;

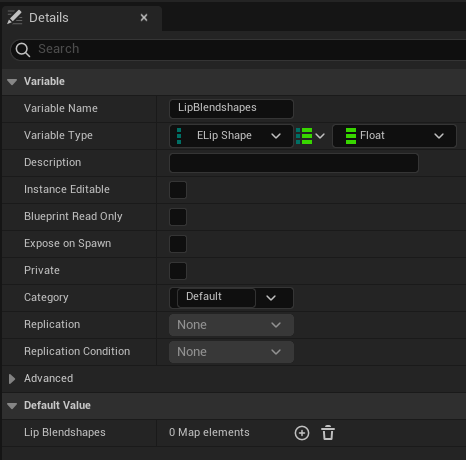

/** ¬We will use this variable to set lip expressions weighting. */

TMap<ELipShape, float> LipWeighting;

/** ¬This TMap variable is used to store the corresponding result of Avatar's eye blend shapes(key) and OpenXRFacialTracking eye expressions(value). */

TMap<FName, EEyeShape> EyeShapeTable;

/** ¬This TMap variable is used to store the corresponding result of Avatar's lip blend shapes(key) and OpenXRFacialTracking lip expressions(value). */

TMap<FName, ELipShape> LipShapeTable;

/** This function will calculate the gaze direction to update the eye's anchors rotation to represent the direction of the eye's gaze. */

void UpdateGazeRay();

FORCEINLINE FVector ConvetToUnrealVector(FVector Vector, float Scale = 1.0f)

{

return FVector(Vector.Z * Scale, Vector.X * Scale, Vector.Y * Scale);

}

/** Render the result of face tracking to the avatar's blend shapes. */

template<typename T>

void RenderModelShape(USkeletalMeshComponent* model, TMap<FName, T> shapeTable, TMap<T, float> weighting);

- FT_AvatarSample.cpp : Add the following #include statements at the top.

#include "FT_AvatarSample.h"

#include "FT_Framework.h"

#include "Engine/Classes/Kismet/KismetMathLibrary.h"

- AFT_AvatarSample::AFT_AvatarSample() - Definition for the Skeletal Mesh that will serve as our visual representation.

// Sets default values

AFT_AvatarSample::AFT_AvatarSample()

{

PrimaryActorTick.bCanEverTick = true;

RootComponent = CreateDefaultSubobject<USceneComponent>(TEXT("RootSceneComponent"));

// Head

HeadModel = CreateDefaultSubobject<USkeletalMeshComponent>(TEXT("HeadModel"));

HeadModel->SetupAttachment(RootComponent);

// Eye_L

EyeAnchor_L = CreateDefaultSubobject<USceneComponent>(TEXT("EyeAnchor_L"));

EyeAnchor_L->SetupAttachment(RootComponent);

EyeAnchor_L->SetRelativeLocation(FVector(3.448040f, 7.892285f, 0.824235f));

EyeModel_L = CreateDefaultSubobject<USkeletalMeshComponent>(TEXT("EyeModel_L"));

EyeModel_L->SetupAttachment(EyeAnchor_L);

EyeModel_L->SetRelativeLocation(FVector(-3.448040f, -7.892285f, -0.824235f));

// Eye_R

EyeAnchor_R = CreateDefaultSubobject<USceneComponent>(TEXT("EyeAnchor_R"));

EyeAnchor_R->SetupAttachment(RootComponent);

EyeAnchor_R->SetRelativeLocation(FVector(-3.448040f, 7.892285f, 0.824235f));

EyeModel_R = CreateDefaultSubobject<USkeletalMeshComponent>(TEXT("EyeModel_R"));

EyeModel_R->SetupAttachment(EyeAnchor_R);

EyeModel_R->SetRelativeLocation(FVector(3.448040f, -7.892285f, -0.824235f));

EyeAnchors.AddUnique(EyeAnchor_L);

EyeAnchors.AddUnique(EyeAnchor_R);

}

- AFT_AvatarSample::BeginPlay() - Create eye and lip facial tracker.

// Called when the game starts or when spawned

void AFT_AvatarSample::BeginPlay()

{

Super::BeginPlay();

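// Use the first player's pawn as the owner, if a player controller exists.

if (APlayerController* PlayerController = GetWorld()->GetFirstPlayerController())

{

this->SetOwner(PlayerController->GetPawn());

}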

// CreateFacialTracker_Eye

if (EnableEye)

{

FT_Framework::Instance()->StartEyeFramework();

}

// CreateFacialTracker_Lip

if (EnableLip)

{

FT_Framework::Instance()->StartLipFramework();

}

- AFT_AvatarSample::BeginPlay() - Map the avatar's eye blend shapes (key) to the OpenXRFacialTracking eye expressions (value) in the EyeShapeTable .

EyeShapeTable = {

{"Eye_Left_Blink", EEyeShape::Eye_Left_Blink},

{"Eye_Left_Wide", EEyeShape::Eye_Left_Wide},

{"Eye_Left_Right",EEyeShape::Eye_Left_Right},

{"Eye_Left_Left",EEyeShape::Eye_Left_Left},

{"Eye_Left_Up", EEyeShape::Eye_Left_Up},

{"Eye_Left_Down", EEyeShape::Eye_Left_Down},

{"Eye_Right_Blink", EEyeShape::Eye_Right_Blink},

{"Eye_Right_Wide", EEyeShape::Eye_Right_Wide},

{"Eye_Right_Right",EEyeShape::Eye_Right_Right},

{"Eye_Right_Left", EEyeShape::Eye_Right_Left},

{"Eye_Right_Up", EEyeShape::Eye_Right_Up},

{"Eye_Right_Down", EEyeShape::Eye_Right_Down},

//{"Eye_Frown", EEyeShape::Eye_Frown},

{"Eye_Left_squeeze", EEyeShape::Eye_Left_Squeeze},

{"Eye_Right_squeeze", EEyeShape::Eye_Right_Squeeze}

};

- AFT_AvatarSample::BeginPlay() - Map the avatar's lip blend shapes (key) to the OpenXRFacialTracking lip expressions (value) in the LipShapeTable .

LipShapeTable = {

// Jaw

{"Jaw_Right", ELipShape::Jaw_Right},

{"Jaw_Left", ELipShape::Jaw_Left},

{"Jaw_Forward", ELipShape::Jaw_Forward},

{"Jaw_Open", ELipShape::Jaw_Open},

// Mouth

{"Mouth_Ape_Shape", ELipShape::Mouth_Ape_Shape},

{"Mouth_Upper_Right", ELipShape::Mouth_Upper_Right},

{"Mouth_Upper_Left", ELipShape::Mouth_Upper_Left},

{"Mouth_Lower_Right", ELipShape::Mouth_Lower_Right},

{"Mouth_Lower_Left", ELipShape::Mouth_Lower_Left},

{"Mouth_Upper_Overturn", ELipShape::Mouth_Upper_Overturn},

{"Mouth_Lower_Overturn", ELipShape::Mouth_Lower_Overturn},

{"Mouth_Pout", ELipShape::Mouth_Pout},

{"Mouth_Smile_Right", ELipShape::Mouth_Smile_Right},

{"Mouth_Smile_Left", ELipShape::Mouth_Smile_Left},

{"Mouth_Sad_Right", ELipShape::Mouth_Sad_Right},

{"Mouth_Sad_Left", ELipShape::Mouth_Sad_Left},

{"Cheek_Puff_Right", ELipShape::Cheek_Puff_Right},

{"Cheek_Puff_Left", ELipShape::Cheek_Puff_Left},

{"Cheek_Suck", ELipShape::Cheek_Suck},

{"Mouth_Upper_UpRight", ELipShape::Mouth_Upper_UpRight},

{"Mouth_Upper_UpLeft", ELipShape::Mouth_Upper_UpLeft},

{"Mouth_Lower_DownRight", ELipShape::Mouth_Lower_DownRight},

{"Mouth_Lower_DownLeft", ELipShape::Mouth_Lower_DownLeft},

{"Mouth_Upper_Inside", ELipShape::Mouth_Upper_Inside},

{"Mouth_Lower_Inside", ELipShape::Mouth_Lower_Inside},

{"Mouth_Lower_Overlay", ELipShape::Mouth_Lower_Overlay},

// Tongue

{"Tongue_LongStep1", ELipShape::Tongue_LongStep1},

{"Tongue_Left", ELipShape::Tongue_Left},

{"Tongue_Right", ELipShape::Tongue_Right},

{"Tongue_Up", ELipShape::Tongue_Up},

{"Tongue_Down", ELipShape::Tongue_Down},


{"Tongue_Roll", ELipShape::Tongue_Roll},

{"Tongue_LongStep2", ELipShape::Tongue_LongStep2},

{"Tongue_UpRight_Morph", ELipShape::Tongue_UpRight_Morph},

{"Tongue_UpLeft_Morph", ELipShape::Tongue_UpLeft_Morph},

{"Tongue_DownRight_Morph", ELipShape::Tongue_DownRight_Morph},

{"Tongue_DownLeft_Morph", ELipShape::Tongue_DownLeft_Morph}

};

} // End of AFT_AvatarSample::BeginPlay()

The blend shape names can be found in the avatar's skeletal mesh asset.
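Because the table keys have to match the blend shape (morph target) names in the mesh asset, a misspelled key would simply leave that shape unanimated. The sketch below shows one way to list table keys that have no matching morph target. It is plain C++ under stated assumptions: `FindMissingShapes` and `ToLower` are hypothetical helpers, the names are passed in as strings rather than read from the asset, and the comparison ignores case on the assumption that the keys are stored as `FName` values, whose comparison is case-insensitive.

```cpp
#include <algorithm>
#include <cctype>
#include <set>
#include <string>
#include <vector>

// Lower-cases a name so that lookups ignore case, similar to FName comparison.
std::string ToLower(std::string name) {
    std::transform(name.begin(), name.end(), name.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    return name;
}

// Hypothetical helper: returns every table key that has no matching morph
// target name, in the order the keys were given.
std::vector<std::string> FindMissingShapes(const std::vector<std::string>& tableKeys,
                                           const std::vector<std::string>& morphTargets) {
    std::set<std::string> known;
    for (const std::string& target : morphTargets) known.insert(ToLower(target));

    std::vector<std::string> missing;
    for (const std::string& key : tableKeys) {
        if (known.count(ToLower(key)) == 0) missing.push_back(key);
    }
    return missing;
}
```

Running such a check once at startup and logging the returned names makes naming mismatches visible before any tracking data is applied.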

- AFT_AvatarSample::RenderModelShape() - Render the result of face tracking to the avatar's blend shapes.

template<typename T>

void AFT_AvatarSample::RenderModelShape(USkeletalMeshComponent* model, TMap<FName, T> shapeTable, TMap<T, float> weighting)

{

if (shapeTable.Num() <= 0) return;

if (weighting.Num() <= 0) return;

for (auto &table : shapeTable)

{

// Skip entries mapped to None and entries that have no tracked weight.

const float* weight = weighting.Find(table.Value);

if (table.Value != T::None && weight != nullptr)

{

model->SetMorphTarget(table.Key, *weight);

}

}

}
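The function above is a table-driven update: for each blend shape name in the table, it looks up the weight of the mapped expression and writes it to the morph target of that name. The following standalone sketch shows the same idea with standard containers in place of `TMap` and a callback in place of `SetMorphTarget`. `ApplyShapeTable` and the three-value `LipShape` enum are hypothetical stand-ins, not part of the plugin.

```cpp
#include <functional>
#include <map>
#include <string>

// Stand-in for a few values of the plugin's lip expression enum.
enum class LipShape { JawOpen, MouthPout, TongueUp };

// Applies every weight that has a table entry. setMorphTarget stands in for
// the skeletal mesh component's SetMorphTarget call.
// Returns how many morph targets were set.
template <typename Shape>
int ApplyShapeTable(const std::map<std::string, Shape>& shapeTable,
                    const std::map<Shape, float>& weighting,
                    const std::function<void(const std::string&, float)>& setMorphTarget) {
    int applied = 0;
    for (const auto& entry : shapeTable) {
        const auto weight = weighting.find(entry.second);
        if (weight == weighting.end()) continue;  // No data for this expression.
        setMorphTarget(entry.first, weight->second);
        ++applied;
    }
    return applied;
}
```

Passing the containers by const reference avoids copying both maps on every frame, and looking the weight up with `find` skips expressions that have no data instead of indexing a missing key.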

- AFT_AvatarSample::UpdateGazeRay() - This function will calculate the gaze direction to update the eye's anchors rotation to represent the direction of the eye's gaze.

void AFT_AvatarSample::UpdateGazeRay()

{

// Calculate left eye gaze direction

FVector gazeDirectionCombinedLocal_L;

FVector modelGazeOrigin_L, modelGazeTarget_L;

FRotator lookAtRotation_L, eyeRotator_L;

if (EyeWeighting.Num() <= 0) return;

if (EyeWeighting[EEyeShape::Eye_Left_Right] > EyeWeighting[EEyeShape::Eye_Left_Left])

{

gazeDirectionCombinedLocal_L.X = EyeWeighting[EEyeShape::Eye_Left_Right];

}

else

{

gazeDirectionCombinedLocal_L.X = -EyeWeighting[EEyeShape::Eye_Left_Left];

}

if (EyeWeighting[EEyeShape::Eye_Left_Up] > EyeWeighting[EEyeShape::Eye_Left_Down])

{

gazeDirectionCombinedLocal_L.Y = EyeWeighting[EEyeShape::Eye_Left_Up];

}

else

{

gazeDirectionCombinedLocal_L.Y = -EyeWeighting[EEyeShape::Eye_Left_Down];

}

gazeDirectionCombinedLocal_L.Z = 1.0f;

modelGazeOrigin_L = EyeAnchors[0]->GetRelativeLocation();

modelGazeTarget_L = EyeAnchors[0]->GetRelativeLocation() + gazeDirectionCombinedLocal_L;


lookAtRotation_L = UKismetMathLibrary::FindLookAtRotation(ConvetToUnrealVector(modelGazeOrigin_L), ConvetToUnrealVector(modelGazeTarget_L));

eyeRotator_L = FRotator(lookAtRotation_L.Roll, lookAtRotation_L.Yaw, -lookAtRotation_L.Pitch);

EyeAnchors[0]->SetRelativeRotation(eyeRotator_L);

// Calculate right eye gaze direction

FVector gazeDirectionCombinedLocal_R;

FVector modelGazeOrigin_R, modelGazeTarget_R;

FRotator lookAtRotation_R, eyeRotator_R;

if (EyeWeighting[EEyeShape::Eye_Right_Left] > EyeWeighting[EEyeShape::Eye_Right_Right])

{

gazeDirectionCombinedLocal_R.X = -EyeWeighting[EEyeShape::Eye_Right_Left];

}

else

{

gazeDirectionCombinedLocal_R.X = EyeWeighting[EEyeShape::Eye_Right_Right];

}

if (EyeWeighting[EEyeShape::Eye_Right_Up] > EyeWeighting[EEyeShape::Eye_Right_Down])

{

gazeDirectionCombinedLocal_R.Y = EyeWeighting[EEyeShape::Eye_Right_Up];

}

else

{

gazeDirectionCombinedLocal_R.Y = -EyeWeighting[EEyeShape::Eye_Right_Down];

}

gazeDirectionCombinedLocal_R.Z = 1.0f;

modelGazeOrigin_R = EyeAnchors[1]->GetRelativeLocation();

modelGazeTarget_R = EyeAnchors[1]->GetRelativeLocation() + gazeDirectionCombinedLocal_R;


lookAtRotation_R = UKismetMathLibrary::FindLookAtRotation(ConvetToUnrealVector(modelGazeOrigin_R), ConvetToUnrealVector(modelGazeTarget_R));

eyeRotator_R = FRotator(lookAtRotation_R.Roll, lookAtRotation_R.Yaw, -lookAtRotation_R.Pitch);

EyeAnchors[1]->SetRelativeRotation(eyeRotator_R);

}
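The geometry behind the gaze update can be tried outside the engine. The sketch below is plain C++ with hypothetical names (`Vec3`, `GazeFromWeights`, `ToUnrealAxes`, `YawPitchDegrees`). It builds the gaze direction from the four directional weights following the left-eye branch above, applies the same axis swap as `ConvetToUnrealVector`, and derives yaw and pitch angles from the result. It illustrates the math only and is not the engine's `FindLookAtRotation` implementation.

```cpp
#include <cmath>

struct Vec3 { float X, Y, Z; };

// Builds the local gaze direction from the four directional eye weights:
// the larger weight of each opposing pair wins, and Z is fixed at 1
// (straight ahead).
Vec3 GazeFromWeights(float right, float left, float up, float down) {
    Vec3 gaze;
    gaze.X = (right > left) ? right : -left;
    gaze.Y = (up > down) ? up : -down;
    gaze.Z = 1.0f;
    return gaze;
}

// Same axis swap as ConvetToUnrealVector: (X, Y, Z) -> (Z, X, Y), so the
// tracking data's forward axis (Z) becomes Unreal's forward axis (X).
Vec3 ToUnrealAxes(const Vec3& v) { return Vec3{v.Z, v.X, v.Y}; }

// Yaw and pitch, in degrees, of a direction given in Unreal axes
// (X forward, Y right, Z up).
void YawPitchDegrees(const Vec3& dir, float& yaw, float& pitch) {
    const float radToDeg = 180.0f / 3.14159265358979f;
    yaw = std::atan2(dir.Y, dir.X) * radToDeg;
    pitch = std::atan2(dir.Z, std::sqrt(dir.X * dir.X + dir.Y * dir.Y)) * radToDeg;
}
```

Because the forward component is fixed at 1, a weight of 1 to the right with no vertical component gives a yaw of 45 degrees and a pitch of 0, so the eye can rotate at most 45 degrees on each axis under this scheme.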

- AFT_AvatarSample::Tick() - Update avatar’s Eye and Lip shapes every frame and update eyes gaze direction.

// Called every frame

void AFT_AvatarSample::Tick(float DeltaTime)

{

Super::Tick(DeltaTime);

//Update Eye Shapes

if (EnableEye)

{

UViveOpenXRFacialTrackingFunctionLibrary::GetEyeFacialExpressions(isEyeActive, EyeWeighting);

RenderModelShape(HeadModel, EyeShapeTable, EyeWeighting);

UpdateGazeRay();

}

//Update Lip Shapes

if (EnableLip)

{

UViveOpenXRFacialTrackingFunctionLibrary::GetLipFacialExpressions(isLipActive, LipWeighting);

RenderModelShape(HeadModel, LipShapeTable, LipWeighting);

}

}

- AFT_AvatarSample::EndPlay() - Release Eye and Lip Facial Tracker.

void AFT_AvatarSample::EndPlay(const EEndPlayReason::Type EndPlayReason)

{

Super::EndPlay(EndPlayReason);

// Stop and destroy both the eye and lip facial trackers

FT_Framework::Instance()->StopAllFramework();

FT_Framework::Instance()->DestroyFramework();

EyeShapeTable.Empty();

LipShapeTable.Empty();

}

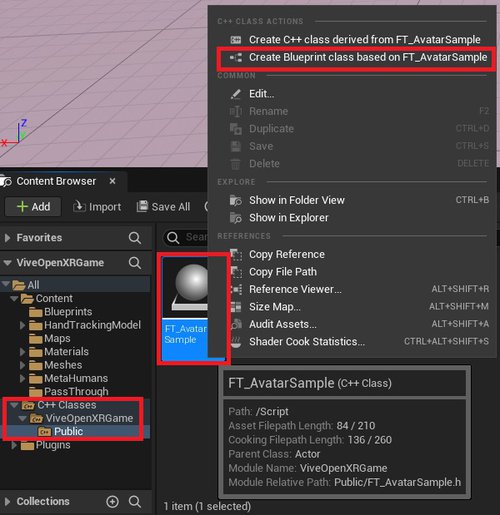

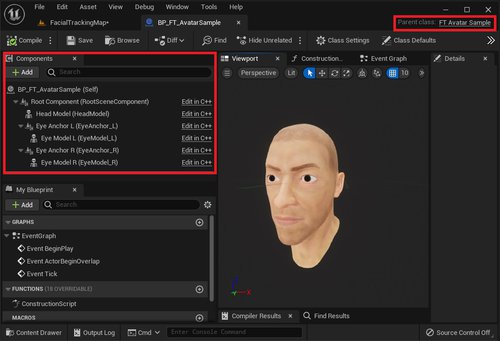

- Create a Blueprint class based on FT_AvatarSample : Content Browser > All > C++ Classes > Public > right-click FT_AvatarSample > choose Create Blueprint class based on FT_AvatarSample


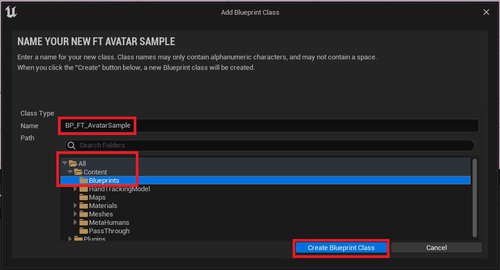

In NAME YOUR NEW FT AVATAR SAMPLE step:

- Name: BP_FT_AvatarSample

- Path: All > Content > Blueprints

- After the previous settings are completed, press Create Blueprint Class .


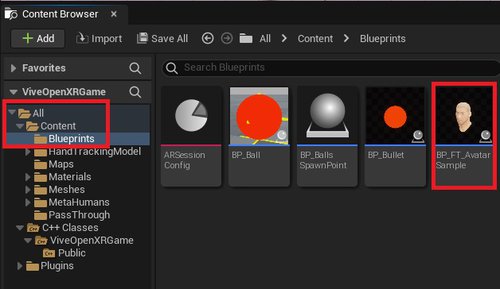

- After the Blueprint class is created, go to All > Content > Blueprints , where BP_FT_AvatarSample can be found, and open it.


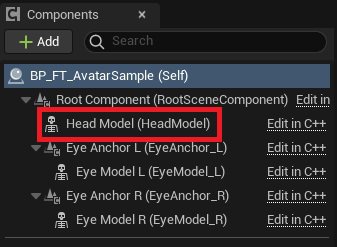

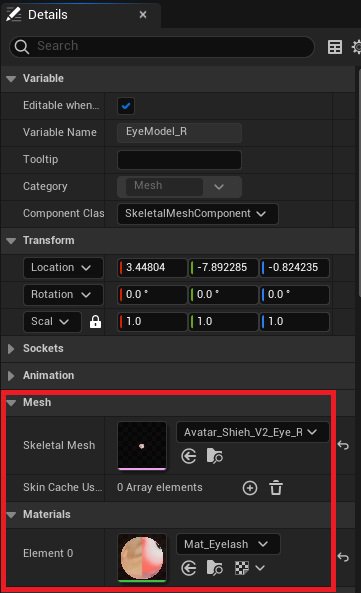

- After opening it, confirm that the Parent class is FT_AvatarSample . The components inherited from the C++ parent are listed in the Components panel.


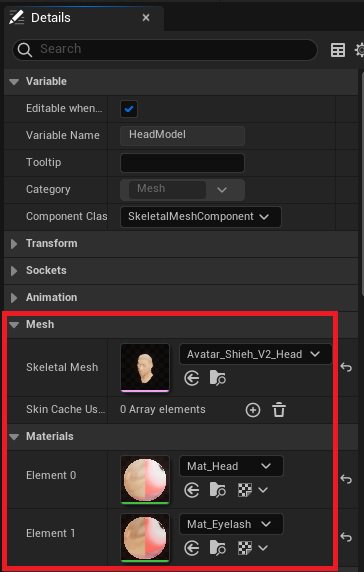

- Set Head Skeletal Mesh in HeadModel > Details panel > Mesh > Skeletal Mesh.

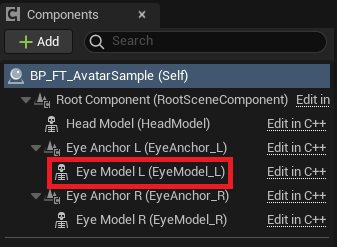

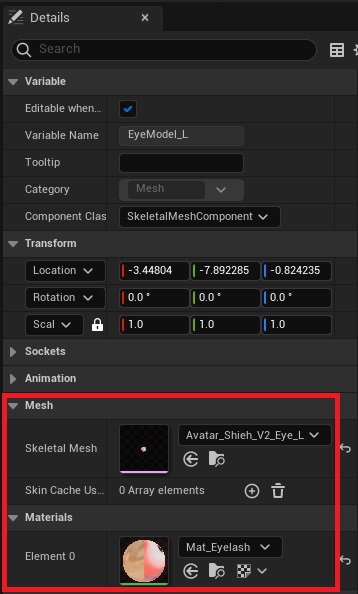

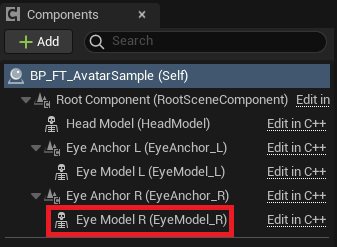

- Set Left Eye Skeletal Mesh in EyeModel_L > Details panel > Mesh > Skeletal Mesh.

- Set Right Eye Skeletal Mesh in EyeModel_R > Details panel > Mesh > Skeletal Mesh.

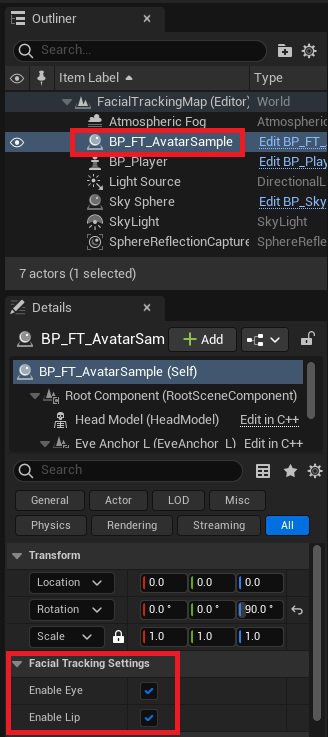

- Go back to the Level Editor Default Interface and drag “BP_FT_AvatarSample” into the Level. Eye and Lip tracking can be enabled or disabled in: Outliner panel > click BP_FT_AvatarSample > Details panel > Facial Tracking Settings > Enable Eye / Enable Lip .

Result