MetaHuman

Introduction

MetaHuman helps developers create content with realistic facial expressions.

Supported Platforms and Devices

| Platform | | Headset | Supported | Plugin Version |
|---|---|---|---|---|
| PC | PC Streaming | Focus 3/XR Elite/Focus Vision | Yes | 2.0.0 and above |
| PC | Pure PC | Vive Cosmos | No | |
| PC | Pure PC | Vive Pro series | Yes | 2.0.0 and above |
| AIO | | Focus 3/XR Elite/Focus Vision | Yes | 2.0.0 and above |

Sample Project

The full sample project is available on GitHub:

ViveOpenXR MetaHuman Sample

ViveOpenXR assets for MetaHuman

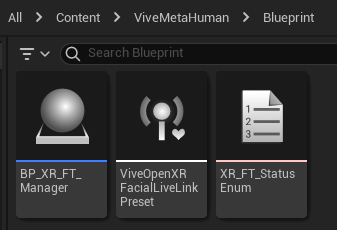

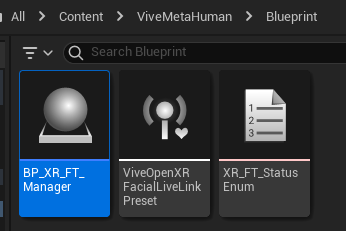

- The ViveMetaHuman > Blueprint folder contains a Manager Actor, a LiveLinkPreset, and an Enumeration.

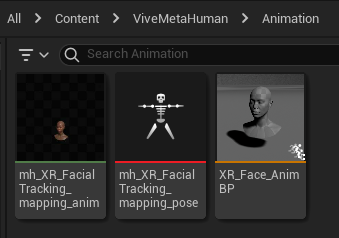

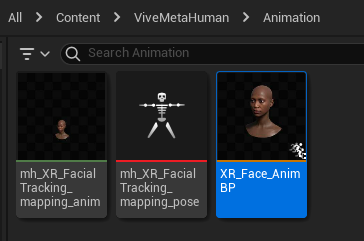

- The ViveMetaHuman > Animation folder contains a Sequence, a Pose Asset, and an Animation Blueprint.

Enable Plugins

- Go to Edit > Plugins, search for OpenXR and ViveOpenXR, and make sure both are enabled.

- Go to Edit > Plugins > Built-in > Virtual Reality and enable OpenXREyeTracker.

- Note that the "SteamVR" and "OculusVR" plugins must be disabled for OpenXR to work.

- Restart the engine for the changes to take effect.
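The plugin states above can also be set outside the editor, since a `.uproject` file is JSON with a `Plugins` array of `Name`/`Enabled` entries. The following is a minimal sketch, not an official tool. It assumes the plugin names stored in the project file match the names used in the steps above; `apply_plugin_states`, `update_uproject`, and the project path are hypothetical names introduced for illustration.

```python
import json
from pathlib import Path

# Plugin names taken from the steps above (assumed to match the names
# stored in the .uproject file); True = enabled, False = disabled.
DESIRED_PLUGINS = {
    "OpenXR": True,
    "ViveOpenXR": True,
    "OpenXREyeTracker": True,
    "SteamVR": False,
    "OculusVR": False,
}


def apply_plugin_states(project: dict, desired: dict = DESIRED_PLUGINS) -> dict:
    """Return a copy of a parsed .uproject with the desired plugin states set."""
    updated = json.loads(json.dumps(project))  # deep copy via a JSON round trip
    plugins = updated.setdefault("Plugins", [])
    by_name = {entry.get("Name"): entry for entry in plugins}
    for name, enabled in desired.items():
        if name in by_name:
            # Plugin already listed: only flip its Enabled flag.
            by_name[name]["Enabled"] = enabled
        else:
            # Plugin not listed yet: append a new entry.
            plugins.append({"Name": name, "Enabled": enabled})
    return updated


def update_uproject(path: str) -> None:
    """Rewrite the .uproject file at `path` in place (hypothetical helper)."""
    project_file = Path(path)
    project = json.loads(project_file.read_text(encoding="utf-8"))
    project_file.write_text(
        json.dumps(apply_plugin_states(project), indent="\t"),
        encoding="utf-8",
    )
```

Calling `update_uproject("MyProject.uproject")` (a placeholder path) with the editor closed should have the same effect as toggling the plugins in the Plugins browser. Back up the project file first, and open the editor afterwards to confirm the result.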

Integrate VIVE OpenXR Facial Tracking with MetaHuman

- Make sure the ViveOpenXR plugin is installed and the OpenXREyeTracker plugin is enabled.

- Go to Edit > Project Settings > Plugins > Vive OpenXR and, under Facial Tracking, check Enable Facial Tracking to enable the OpenXR Facial Tracking extension.

- Import your MetaHuman.

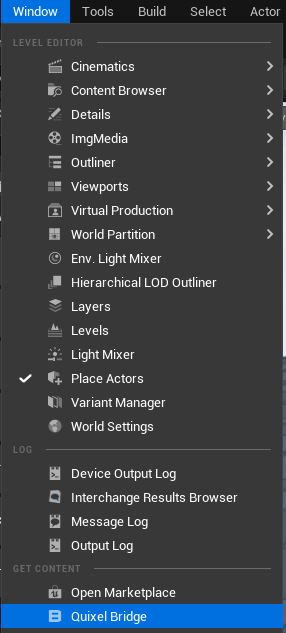

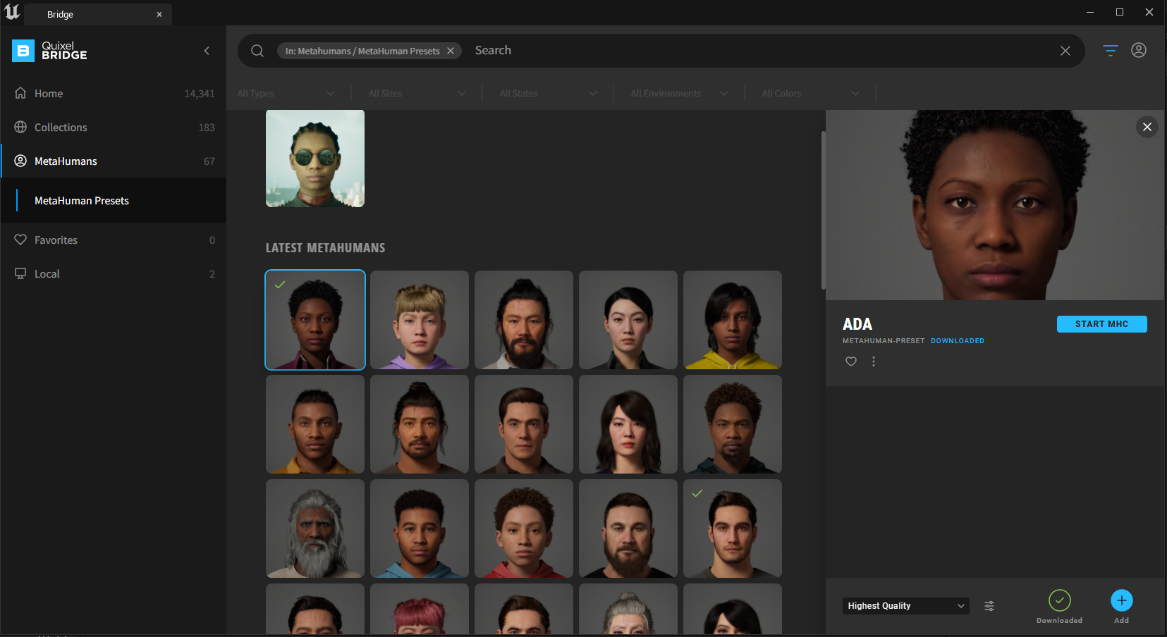

i. From the Window dropdown menu, open Quixel Bridge.

ii. Pick a MetaHuman, download it, and add it to your project.

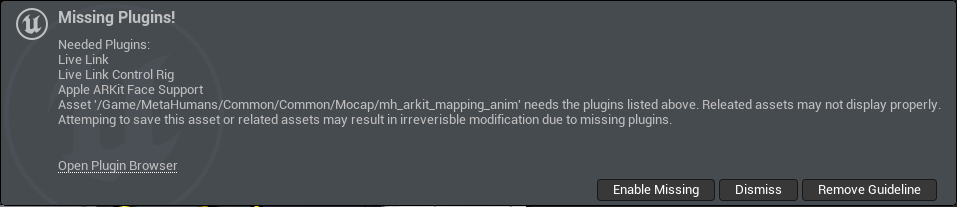

iii. After the MetaHuman is imported, several prompts will pop up.

For the prompt about Apple ARKit facial support, press Dismiss to ignore it.

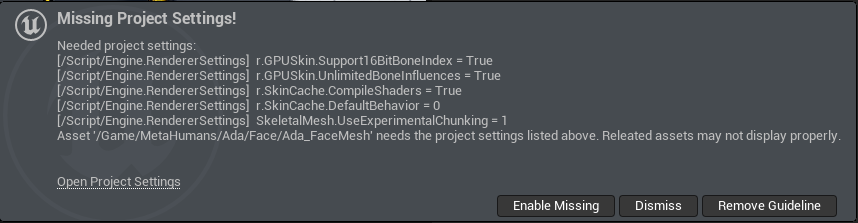

To enable the Render Settings, press Enable Missing.

To enable the Groom plugin, press Enable Missing.

-

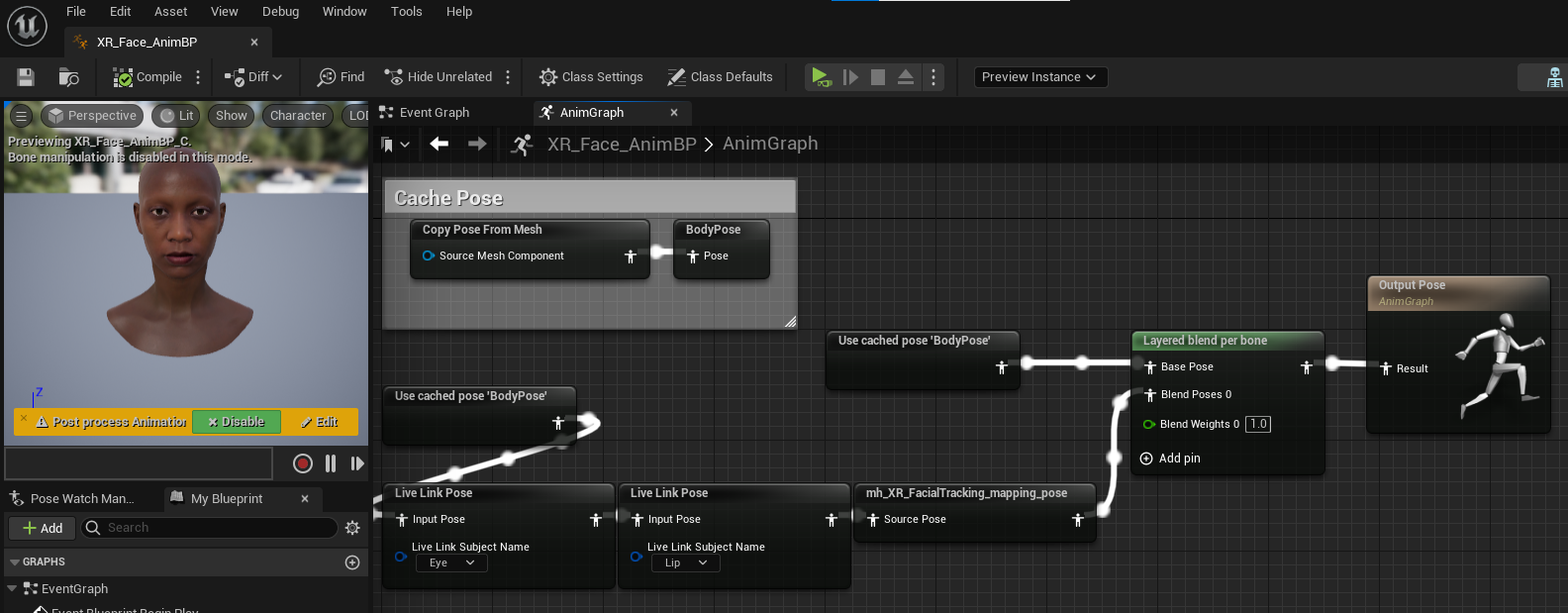

Under ViveMetaHuman > Animation, double click on the animation blueprint XR_Face_AnimBP.

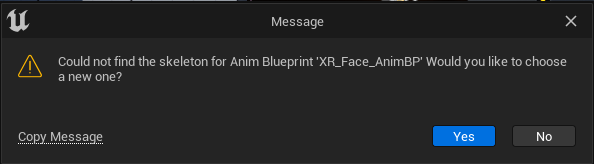

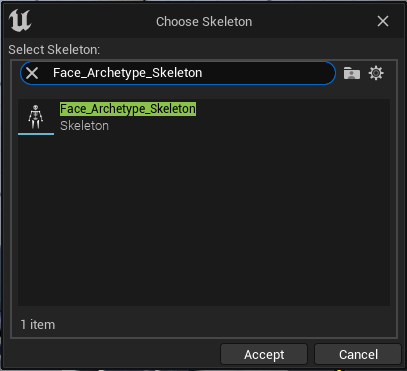

If a pop-up window says Could not find skeleton, click Yes to link your MetaHuman face skeleton to the Animation Blueprint.

Then select Face_Archetype_Skeleton.

-

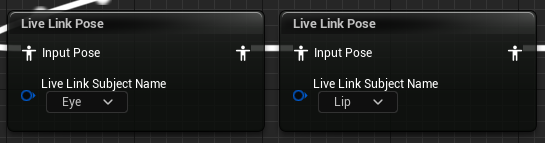

Open up the Animation Blueprint AnimGraph and check if the Live Link Pose is set to Eye and Lip.

-

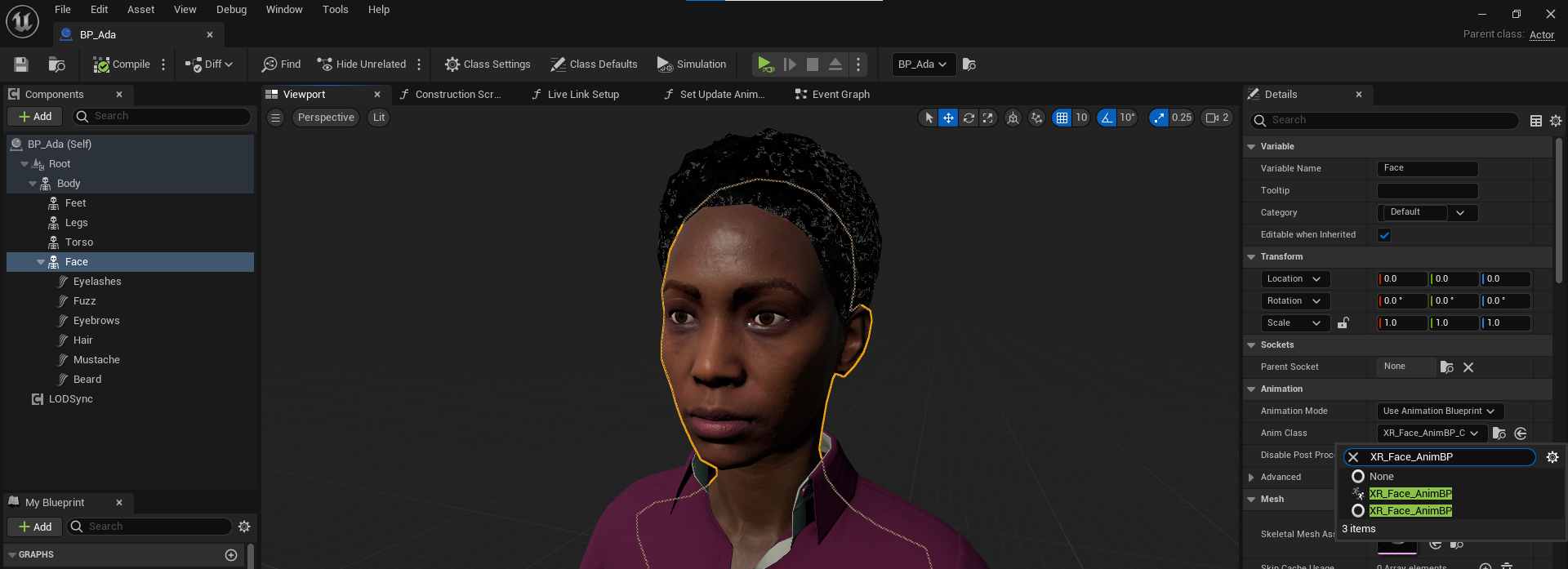

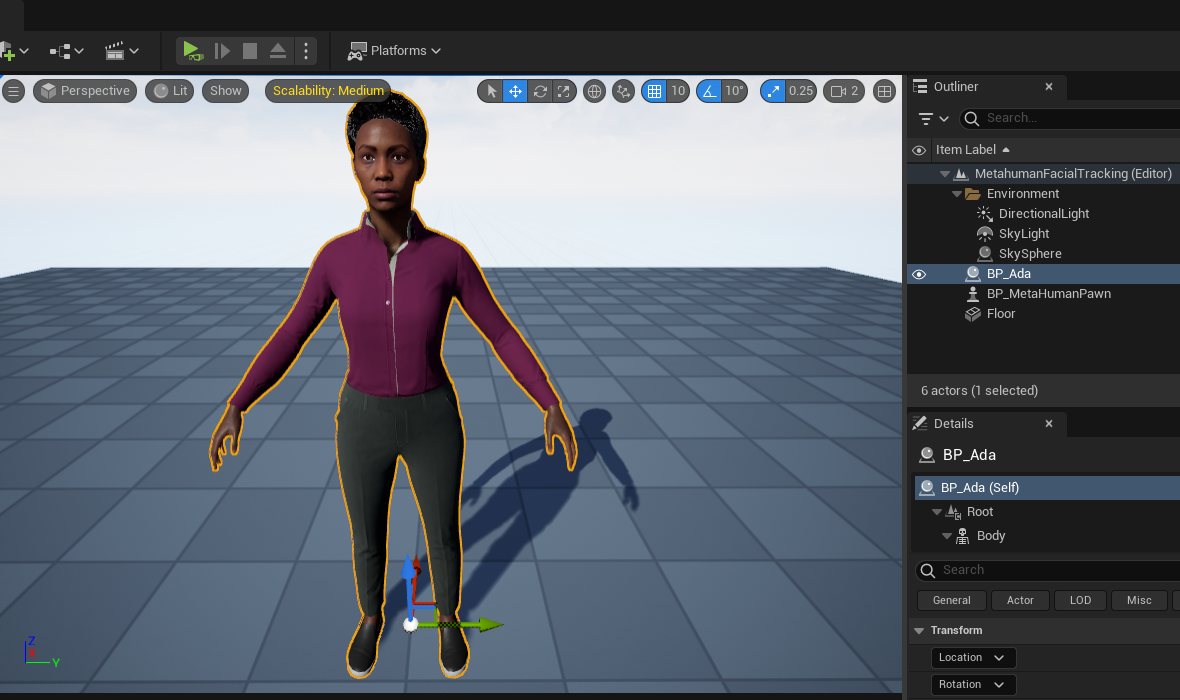

Open up BP_Ada(Your MetaHuman name) under MetaHuman > Ada(Your MetaHuman name folder).

- Change the Face Anim Class to XR_Face_AnimBP.

- Drag your MetaHuman into the map.

-

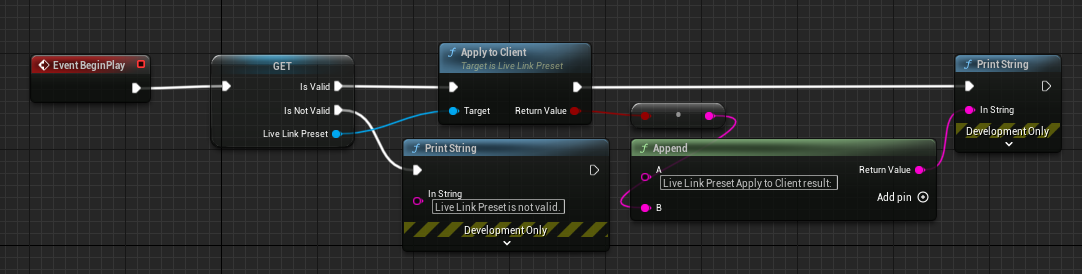

Next drag the BP_XR_FT_Manager under ViveMetaHumant > Blueprint into the map.

BP_XR_FT_Manager will pass the ViveOpenXRFacialLiveLinkPreset to Live Link Client.

- With all facial tracking devices connected, press Play to see the result.
