Hand Tracking (Joint Pose)

In previous chapters, we discussed how to use the controller as the input to interact with objects in XR. However, as developers, we should aim to create content that is as immersive as possible. After all, wasn't that what drew us to XR in the first place: creating content that the player can interact with in person, rather than indirectly through an avatar as in other media such as PC or console games?

Supported Platforms and Devices

| Platform | Headset | Supported | Plugin Version |
| --- | --- | --- | --- |
| PC (PC Streaming) | Focus 3 / XR Elite / Focus Vision | Yes | 2.0.0 and above |
| PC (Pure PC) | Vive Cosmos | Yes | 2.0.0 and above |
| PC (Pure PC) | Vive Pro series | Yes | 2.0.0 and above |
| AIO | Focus 3 | Yes | 2.0.0 and above |
| AIO | XR Elite | Yes | 2.0.0 and above |
| AIO | Focus Vision | Yes | 2.0.0 and above |

Specification

This chapter will explore how to create more immersive experiences using the Hand Tracking and Interaction features within the VIVE XR Hand Tracking extensions.

Environment Settings

Currently, the Unity Editor doesn't provide a default Hand Tracking interface, so this chapter uses the VIVE XR Hand Tracking extension. Before starting, check that your development environment meets the following requirements.

Step 1. Check your VIVE OpenXR Plugin package version. Go to Window > Package Manager and confirm that the VIVE OpenXR Plugin version is 2.0.0 or newer.

Step 2. Enable the Hand Tracking feature. Go to Project Settings > XR Plug-In Management > OpenXR and enable VIVE XR Hand Tracking.


Golden Sample

See Your Hand In XR

This chapter shows how to use VIVE OpenXR Hand Tracking, which I believe is simpler, more efficient, and covers most use cases. If you're interested in the API design of the original plug-in, see API_Reference.

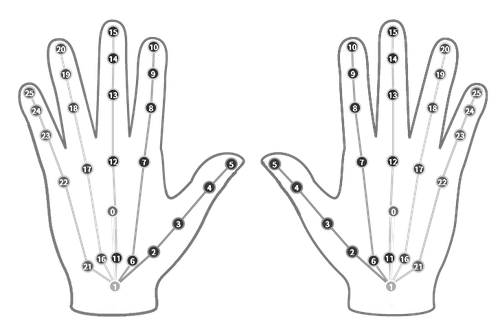

VIVE OpenXR Hand Tracking defines 26 joints for each hand, as seen below.

Each joint contains useful information, such as tracking status, position, and rotation.
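To make the 26 joint indices easier to read, the sketch below lists them in the order defined by the OpenXR XR_EXT_hand_tracking extension. The enum `HandJointIndex` and the demo class are hypothetical helpers written for this illustration and are not part of the VIVE plugin. The sketch also assumes that the joint arrays used later in this chapter follow the same order.

```csharp
using System;

// Hypothetical helper enum (not part of the VIVE plugin). It mirrors the joint
// order of the OpenXR XR_EXT_hand_tracking extension; values without an
// explicit number continue counting from the previous entry.
public enum HandJointIndex
{
    Palm = 0,
    Wrist = 1,
    ThumbMetacarpal = 2, ThumbProximal, ThumbDistal, ThumbTip,
    IndexMetacarpal = 6, IndexProximal, IndexIntermediate, IndexDistal, IndexTip,
    MiddleMetacarpal = 11, MiddleProximal, MiddleIntermediate, MiddleDistal, MiddleTip,
    RingMetacarpal = 16, RingProximal, RingIntermediate, RingDistal, RingTip,
    LittleMetacarpal = 21, LittleProximal, LittleIntermediate, LittleDistal, LittleTip
}

public static class HandJointIndexDemo
{
    public static void Main()
    {
        // Number of joints per hand.
        Console.WriteLine(Enum.GetValues(typeof(HandJointIndex)).Length);

        // Index that would be used to look up the tip of the index finger.
        Console.WriteLine((int)HandJointIndex.IndexTip);
    }
}
```

With an enum like this, a joint index can be written as `(int)HandJointIndex.IndexTip` instead of a bare number, which makes it clearer which joint a script is following.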

Step 1. Create a Rig

1.1 In the editor, create a basic rig like this.

![]()

Remember to attach a TrackedPoseDriver to the Head, as mentioned in the previous chapter.

1.2 Create two empty GameObjects named LeftHand and RightHand under Rig.


The hand joints will be placed in these two GameObjects.

Step 2. Create a Joint prefab

The joint prefab represents the pose of a joint.

First, create a script called Joint_Movement.cs.

In Joint_Movement.cs, first, we add two namespaces.

    using VIVE.OpenXR;
    using VIVE.OpenXR.Hand;

These two namespaces allow us to use the VIVE OpenXR Hand Tracking.

The two variables, jointNum and isLeft, tell the script which joint of which hand it should be tracking.

    public int jointNum;
    public bool isLeft;

In Update(), we’ll retrieve the joint poses from XR_EXT_hand_tracking.Interop.GetJointLocations().

    if (!XR_EXT_hand_tracking.Interop.GetJointLocations(isLeft, out XrHandJointLocationEXT[] handJointLocation))
    {
        return;
    }

Full script:

    using System;
    using System.Collections.Generic;
    using UnityEngine;
    using VIVE.OpenXR.Hand;

    namespace VIVE.OpenXR.Samples.Hand
    {
        public class Joint_Movement : MonoBehaviour
        {
            // Index of the joint to follow (0 to 25) and the hand it belongs to.
            public int jointNum = 0;
            public bool isLeft = false;

            // Child objects that are shown only while the joint is tracked.
            [SerializeField] List<GameObject> Childs = new List<GameObject>();

            private Vector3 jointPos = Vector3.zero;
            private Quaternion jointRot = Quaternion.identity;

            // OpenXR is right-handed and Unity is left-handed, so the z axis is flipped.
            public static void GetVectorFromOpenXR(XrVector3f xrVec3, out Vector3 vec)
            {
                vec.x = xrVec3.x;
                vec.y = xrVec3.y;
                vec.z = -xrVec3.z;
            }

            // Flipping the z axis also means negating z and w of the rotation.
            public static void GetQuaternionFromOpenXR(XrQuaternionf xrQuat, out Quaternion qua)
            {
                qua.x = xrQuat.x;
                qua.y = xrQuat.y;
                qua.z = -xrQuat.z;
                qua.w = -xrQuat.w;
            }

            void Update()
            {
                // Skip this frame if no joint data is available for this hand.
                if (!XR_EXT_hand_tracking.Interop.GetJointLocations(isLeft, out XrHandJointLocationEXT[] handJointLocation)) { return; }

                // Guard against a jointNum that is outside the returned array.
                if (jointNum < 0 || jointNum >= handJointLocation.Length) { return; }

                bool poseTracked = false;

                if (((UInt64)handJointLocation[jointNum].locationFlags & (UInt64)XrSpaceLocationFlags.XR_SPACE_LOCATION_ORIENTATION_TRACKED_BIT) != 0)
                {
                    GetQuaternionFromOpenXR(handJointLocation[jointNum].pose.orientation, out jointRot);
                    // Use the local rotation so it stays consistent with localPosition
                    // below when the rig is moved or rotated.
                    transform.localRotation = jointRot;
                    poseTracked = true;
                }

                if (((UInt64)handJointLocation[jointNum].locationFlags & (UInt64)XrSpaceLocationFlags.XR_SPACE_LOCATION_POSITION_TRACKED_BIT) != 0)
                {
                    GetVectorFromOpenXR(handJointLocation[jointNum].pose.position, out jointPos);
                    transform.localPosition = jointPos;
                    poseTracked = true;
                }

                // Hide the joint's children while neither rotation nor position is tracked.
                ActiveChilds(poseTracked);
            }

            void ActiveChilds(bool _SetActive)
            {
                for (int i = 0; i < Childs.Count; i++)
                {
                    Childs[i].SetActive(_SetActive);
                }
            }
        }
    }

Second, create a simple prefab (a simple ball or cube) named Joint, and then attach the Joint_Movement script to it.
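The Joint_Movement script converts poses from OpenXR's right-handed coordinate system to Unity's left-handed one by negating the z component of positions and the z and w components of rotations. The same rotation conversion is often written as negating x and y instead. The two forms differ only by an overall sign, and a quaternion and its negation describe the same rotation. The sketch below checks that on one sample rotation. It uses System.Numerics rather than Unity types so that it can run outside the engine, and the class name `HandednessCheck` is hypothetical.

```csharp
using System;
using System.Numerics;

// Hypothetical standalone check; not part of the VIVE samples.
public static class HandednessCheck
{
    public static void Main()
    {
        // An arbitrary rotation standing in for an OpenXR joint orientation.
        Quaternion xr = Quaternion.CreateFromAxisAngle(
            Vector3.Normalize(new Vector3(1f, 2f, 3f)), 0.7f);

        // Form used by GetQuaternionFromOpenXR: negate z and w.
        var negatedZW = new Quaternion(xr.X, xr.Y, -xr.Z, -xr.W);

        // Alternative form: negate x and y.
        var negatedXY = new Quaternion(-xr.X, -xr.Y, xr.Z, xr.W);

        // Both quaternions should rotate a test vector to the same result.
        var v = new Vector3(0.3f, -1.2f, 2.0f);
        Vector3 a = Vector3.Transform(v, negatedZW);
        Vector3 b = Vector3.Transform(v, negatedXY);

        Console.WriteLine((a - b).Length() < 1e-5f);
    }
}
```

Running this should print True, which suggests that either form can be used as long as the position's z component is negated as well.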


Step 3. Generate the Hand

We'll use the Joint prefab created in Step 2 to generate the hand model.

Create a second script and name it Show_Hand.cs.

    using UnityEngine;

    namespace VIVE.OpenXR.Samples.Hand
    {
        public class Show_Hand : MonoBehaviour
        {
            // Joint prefab from Step 2; it must have the Joint_Movement script attached.
            public GameObject jointPrefab;

            // Parents for the spawned joints (LeftHand and RightHand from Step 1).
            public Transform leftHand;
            public Transform rightHand;

            void Start()
            {
                GameObject temp;

                // Spawn one joint object per joint index for the left hand.
                for (int i = 0; i < 26; i++)
                {
                    temp = Instantiate(jointPrefab, leftHand);
                    temp.GetComponent<Joint_Movement>().isLeft = true;
                    temp.GetComponent<Joint_Movement>().jointNum = i;
                }

                // Do the same for the right hand.
                for (int i = 0; i < 26; i++)
                {
                    temp = Instantiate(jointPrefab, rightHand);
                    temp.GetComponent<Joint_Movement>().isLeft = false;
                    temp.GetComponent<Joint_Movement>().jointNum = i;
                }
            }
        }
    }

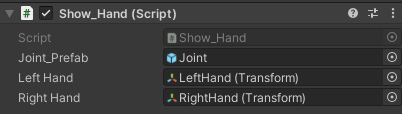

Then, attach this script to any GameObject in the scene and assign jointPrefab, leftHand, and rightHand in the Inspector window.

This script simply spawns 26 joints for each hand.

Now we are ready to go. Build and run the app to see your hands in the XR world.

For a more detailed tutorial, watch this video: Hand Tracking Tutorial Video

Use XR Hands

VIVE OpenXR Hand Tracking also supports Unity XR Hands. If you want to get joint poses through Unity XR Hands, refer to the Access hand data section of the Unity XR Hands documentation. Note that when selecting features, you should enable VIVE XR Hand Tracking instead of Hand Tracking Subsystem.
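As a rough illustration of the Unity XR Hands route, the sketch below looks for a running `XRHandSubsystem` and logs the index fingertip pose of each tracked hand. It assumes the Unity XR Hands package (`com.unity.xr.hands`) is installed and that VIVE XR Hand Tracking is enabled as described above. The class name `XRHandsIndexTipLogger` is hypothetical, and this is a minimal sketch rather than the approach used by the VIVE samples.

```csharp
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.XR.Hands;

// Hypothetical sample component; not part of the VIVE samples.
public class XRHandsIndexTipLogger : MonoBehaviour
{
    static readonly List<XRHandSubsystem> subsystems = new List<XRHandSubsystem>();
    XRHandSubsystem handSubsystem;

    void Update()
    {
        // The subsystem may not be running during the first frames, so keep looking.
        if (handSubsystem == null || !handSubsystem.running)
        {
            handSubsystem = null;
            SubsystemManager.GetSubsystems(subsystems);
            foreach (XRHandSubsystem candidate in subsystems)
            {
                if (candidate.running)
                {
                    handSubsystem = candidate;
                    break;
                }
            }

            if (handSubsystem == null) { return; }
        }

        LogIndexTip(handSubsystem.leftHand, "Left");
        LogIndexTip(handSubsystem.rightHand, "Right");
    }

    static void LogIndexTip(XRHand hand, string label)
    {
        if (!hand.isTracked) { return; }

        // The pose is reported relative to the XR origin.
        XRHandJoint joint = hand.GetJoint(XRHandJointID.IndexTip);
        if (joint.TryGetPose(out Pose pose))
        {
            Debug.Log($"{label} index tip position: {pose.position}");
        }
    }
}
```

Logging every frame is noisy, so in a real project the pose would more likely drive a Transform, much as Joint_Movement does with the VIVE API.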


See Also

Open source project

- VIVE XR Hand Tracking extension

- VIVE XR Hand Interaction