Integrate VIVE OpenXR Facial Tracking with MetaHuman

OpenXR Facial Tracking Plugin Setup

Supported Unreal Engine version: 4.26+


Enable Plugins:

- Enable the plugin under Edit > Plugins > Virtual Reality.

Disable Plugins:

- The "SteamVR" plugin must be disabled for OpenXR to work. Disable it under Edit > Plugins > Virtual Reality.

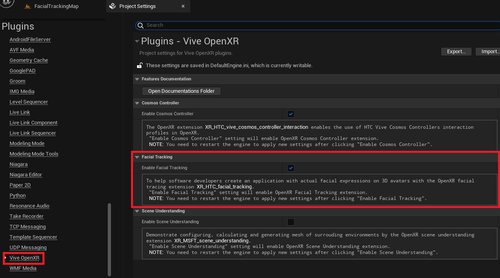

Project Settings:

- Make sure the “OpenXR Facial Tracking extension” is enabled under Edit > Project Settings > Plugins > Vive OpenXR > Facial Tracking.

Initialize / Release Facial Tracker

To use OpenXR Facial Tracking, a few steps are needed to create the facial tracker before use and to release it afterward.

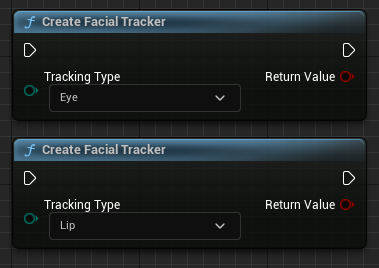

Initialize Facial Tracker

Create Facial Tracker

- This function is usually called at the start of the game. Select Eye or Lip as the function's input to indicate which tracking type to create.

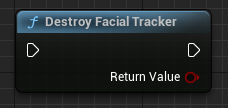

Destroy Facial Tracker

- This function is usually called at the end of the game. It does not take a tracking type as input; it determines by itself which tracking types need to be released.

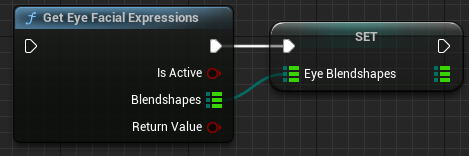

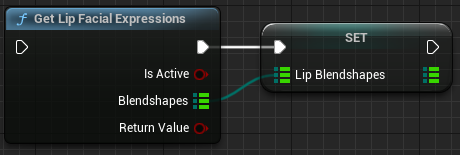
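The create/release lifecycle described above can be modeled in a few lines of plain C++. This is only a sketch of the idea, not the plugin's API: `FacialTrackerRegistry`, `TrackingType`, `Create`, `DestroyAll`, and `IsActive` are hypothetical names introduced for illustration. It shows why the destroy step needs no tracking type: the registry already knows which trackers were created.

```cpp
#include <cassert>
#include <cstddef>
#include <set>

// Stand-in for the two tracking types named in the text (Eye, Lip).
enum class TrackingType { Eye, Lip };

// Hypothetical model of the tracker lifecycle; NOT the plugin's API.
class FacialTrackerRegistry {
public:
    // Models "Create Facial Tracker": the caller picks the tracking type.
    // Returns false if a tracker of that type already exists.
    bool Create(TrackingType type) { return active_.insert(type).second; }

    // Models "Destroy Facial Tracker": takes no type and releases every
    // tracker that was created. Returns how many were released.
    std::size_t DestroyAll() {
        const std::size_t released = active_.size();
        active_.clear();
        assert(active_.empty());  // nothing may remain after release
        return released;
    }

    bool IsActive(TrackingType type) const { return active_.count(type) != 0; }

private:
    std::set<TrackingType> active_;  // trackers currently created
};
```

In this model, calling `Create(TrackingType::Eye)` and `Create(TrackingType::Lip)` at game start and `DestroyAll()` at game end mirrors the Blueprint flow shown in the images.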

Get Eye / Lip Facial Expressions Data

Getting the detection result

Detected Eye and Lip expression results are available from the Blueprint functions “GetEyeFacialExpressions” and “GetLipFacialExpressions”.

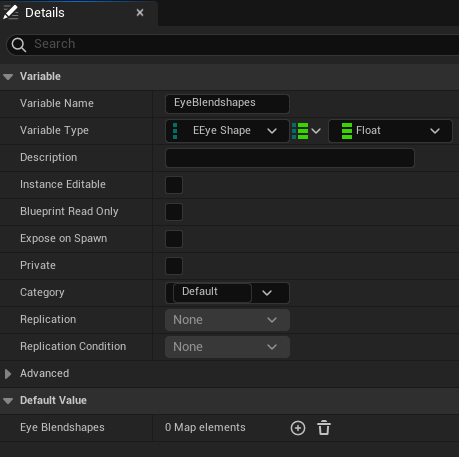

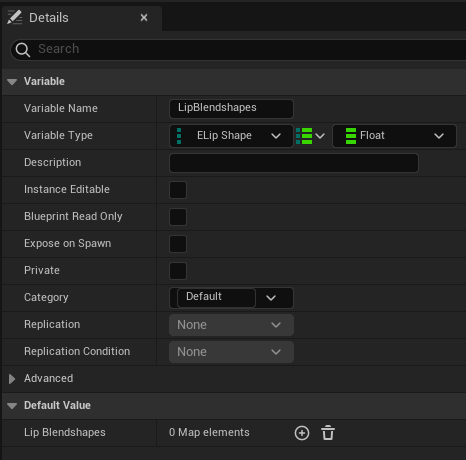
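As a rough illustration of consuming such results, the sketch below assumes each detection result arrives as an array of per-expression weights in the range 0 to 1, which is how the underlying OpenXR XR_HTC_facial_tracking extension reports them (14 eye expressions, 37 lip expressions). `SanitizeWeights` is a hypothetical helper, not a plugin function, and the exact output pins of the Blueprint nodes should be checked in the editor.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Expression counts defined by the OpenXR XR_HTC_facial_tracking extension.
constexpr std::size_t kEyeExpressionCount = 14;
constexpr std::size_t kLipExpressionCount = 37;

// Hypothetical helper (not part of the plugin): rejects an array of the
// wrong size and clamps every weight into [0, 1] before it is used.
bool SanitizeWeights(std::vector<float>& weights, std::size_t expectedCount) {
    assert(expectedCount > 0);
    if (weights.size() != expectedCount) {
        return false;  // wrong size: treat this frame as invalid
    }
    for (float& w : weights) {
        w = std::min(1.0f, std::max(0.0f, w));
    }
    return true;
}
```

A check like this is optional, but it keeps an unexpected frame from driving the face rig with out-of-range values.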

Feed OpenXR Facial Tracking Data to MetaHuman

Apply the data from “GetEyeFacialExpressions” or “GetLipFacialExpressions” to the MetaHuman's facial expressions.

Note: This tutorial uses Blueprint and Animation Blueprint.

Essential Setup

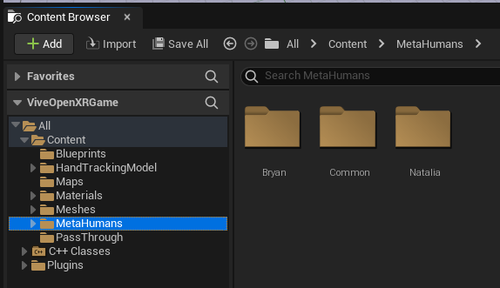

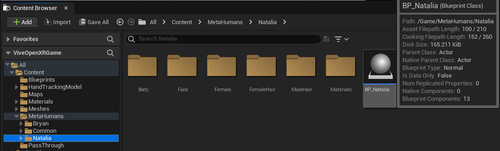

- Follow the MetaHuman Creator – Unreal Engine documentation to import your MetaHuman.

MetaHuman folder structure: All > Content > MetaHumans > “Your MetaHuman”.

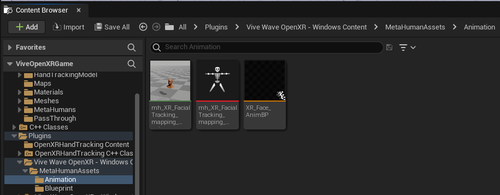

Make sure Plugins > Vive Wave OpenXR – Windows Content > MetaHumanAssets includes the following Animation and Blueprint assets:

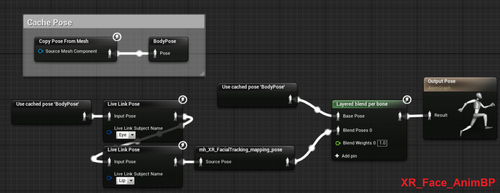

- XR_Face_AnimBP

- mh_XR_FacialTracking_mapping_pose

- mh_XR_FacialTracking_mapping_anim

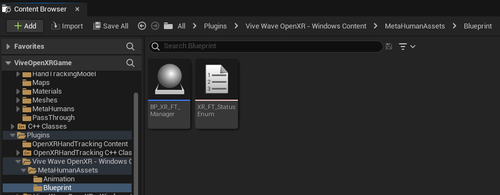

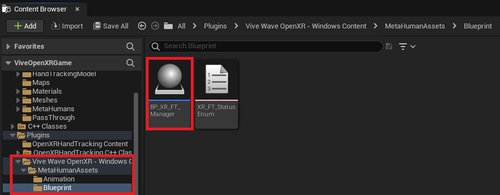

- BP_XR_FT_Manager

- XR_FT_StatusEnum


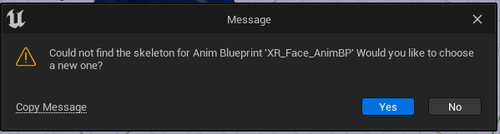

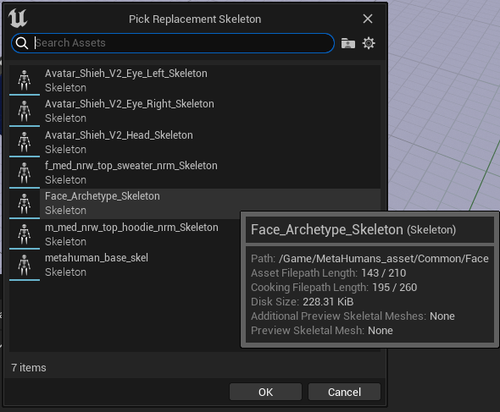

After importing your MetaHuman, the three animation assets provided under the Animation folder may need their skeleton retargeted. If so, follow these steps:

Note: XR_Face_AnimBP is used as the example; the steps for the other two assets are the same.

- Double-click XR_Face_AnimBP, and click Yes when the message window pops up.

- Choose “Face_Archetype_Skeleton” and click OK. (This is the MetaHuman skeleton.)

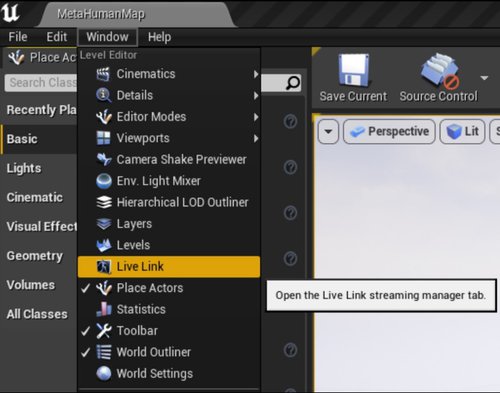

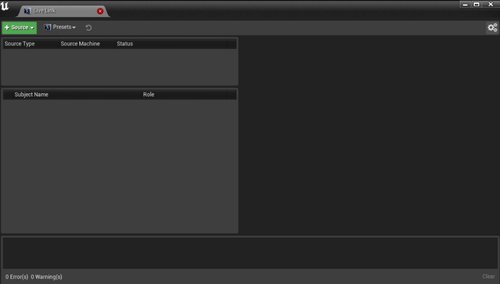

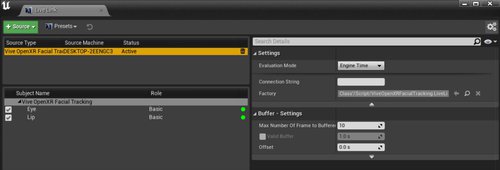

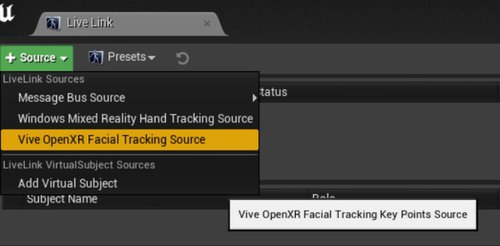

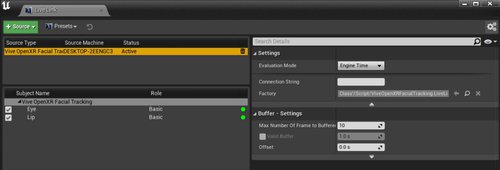

Step 2. How to add Vive OpenXR Facial Tracking Live Link

- Open Live Link Panel

- Add Vive OpenXR Facial Tracking Source

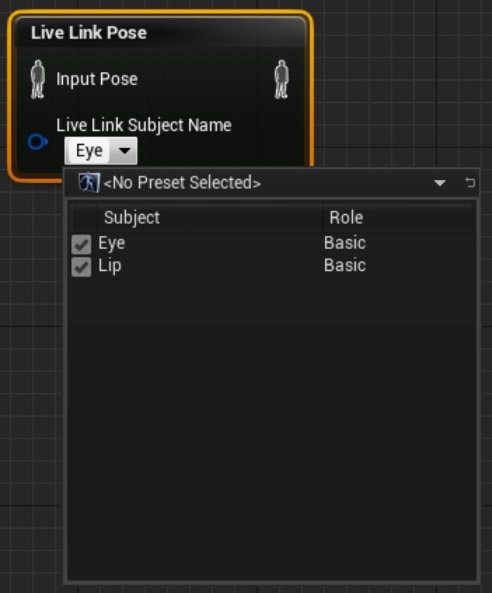

- In the AnimGraph of XR_Face_AnimBP, add a “Live Link Pose” node and set its LiveLinkSubjectName to Eye/Lip. This node controls the MetaHuman's pose.

- Note: If you do not add the ViveOpenXRFacialTrackingSource in the Live Link panel, Eye/Lip will not appear in the LiveLinkSubjectName list.

You can find your MetaHuman Blueprint in:

- All > Content > MetaHumans > “Your MetaHuman” > “BP_YourMetaHuman”

Open “BP_YourMetaHuman”.

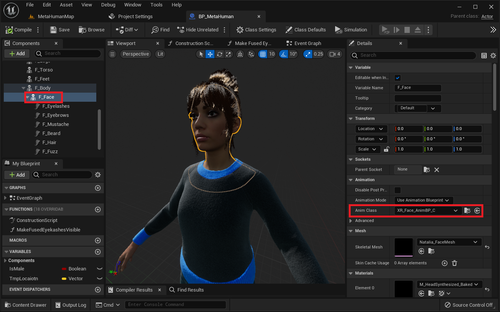

- In the Components panel, click “Face”, then in the Details panel set Animation > Anim Class to XR_Face_AnimBP.

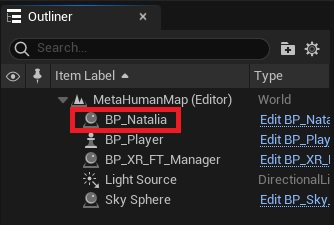

- Go back to the Level Editor's default interface and drag “BP_YourMetaHuman” into the level.

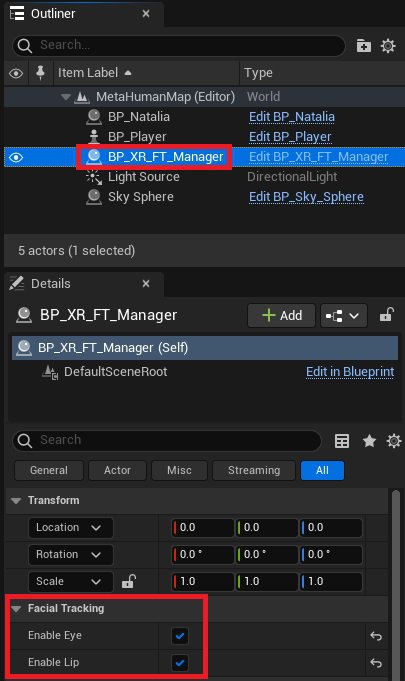

- Drag BP_XR_FT_Manager into the level from Plugins > Vive Wave OpenXR – Windows Content > MetaHumanAssets > Blueprint > BP_XR_FT_Manager.

- BP_XR_FT_Manager handles initializing and releasing the facial tracker.

- Note: This Blueprint's functions are the same as those of “FT_Framework” in the AvatarSample tutorial.

- To enable or disable Eye and Lip, select BP_XR_FT_Manager in the Outliner panel, then set Details panel > Facial Tracking > Enable Eye / Enable Lip.

|

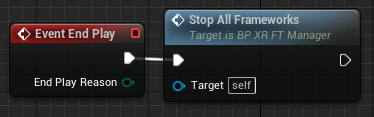

Briefly introduce the functions process of BP_XR_FT_Manager . - We have created StartEyeFramework and StartLipFramework for initial eye and lip facial tracker. - We have created StopAllFrameworks for release eye and lip facial tracker. |
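The manager's flow can be sketched in plain C++ as follows. This is a hypothetical model, not the Blueprint's actual implementation: only the names StartEyeFramework, StartLipFramework, StopAllFrameworks, and the Enable Eye / Enable Lip options come from the text above. The `FacialTrackingManager` class, its `BeginPlay`/`EndPlay` hooks, and the `calls` log are assumptions made for illustration, and the functions here only record the order in which they are called.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical model of BP_XR_FT_Manager; it only records call order.
class FacialTrackingManager {
public:
    bool enableEye = true;  // models Facial Tracking > Enable Eye
    bool enableLip = true;  // models Facial Tracking > Enable Lip

    std::vector<std::string> calls;  // names of the functions called so far

    void StartEyeFramework() { calls.push_back("StartEyeFramework"); }
    void StartLipFramework() { calls.push_back("StartLipFramework"); }
    void StopAllFrameworks() { calls.push_back("StopAllFrameworks"); }

    // Assumed start hook: starts only the frameworks that are enabled.
    void BeginPlay() {
        assert(!started_);  // starting twice would create trackers twice
        started_ = true;
        if (enableEye) StartEyeFramework();
        if (enableLip) StartLipFramework();
    }

    // Assumed end hook: one call releases whatever was started.
    void EndPlay() {
        if (!started_) return;
        StopAllFrameworks();
        started_ = false;
    }

private:
    bool started_ = false;
};
```

With `enableLip` set to false, `BeginPlay()` followed by `EndPlay()` records StartEyeFramework and then StopAllFrameworks, which matches the idea that a single stop call releases every tracker that was started.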

Result